Following the change this week to allow users to send messages to accounts that don’t follow them (amongst other things), Twitter has also been making changes to its policies and enforcement options to ensure that Twitter users play by the rules.

The first area of change is policy: Twitter has updated its violent threats policy, expanding the prohibition from specific, direct violent threats to cover all threats of violence against others, as well as the promotion of violence against others. The previous wording was too narrow, which limited Twitter's ability to act on certain undesirable behaviour. Twitter has also signalled a greater intention to act when things go too far.

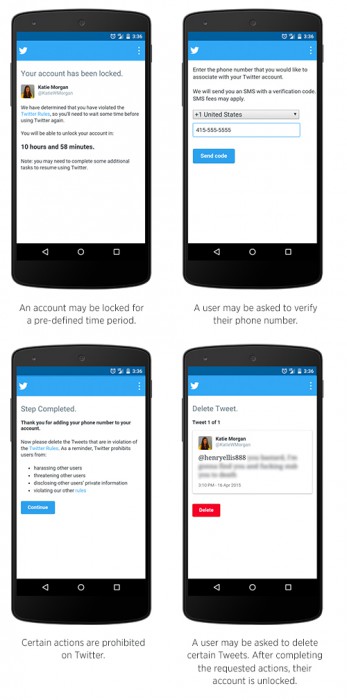

On the enforcement side, Twitter can already require users to delete offensive or policy-violating content, or to verify their phone number. It will now use both as enforcement tools, alongside a new option that allows Twitter support staff to lock abusive accounts for specific periods. This gives Twitter the flexibility to temporarily remove accounts that are targeting particular individuals or groups. One can imagine that this might be particularly useful in response to the gang-up style attacks we’ve seen reported in the media of late. Here’s how these enforcement steps look from a user’s perspective:

Behind the scenes, Twitter is also testing a feature that will allow its support staff to identify suspected abusive tweets before they get reported, and to limit their reach. The feature uses a number of inputs to determine whether a tweet might be abusive, including the age of the account, the similarity of its wording to tweets already known to be abusive, and its proximity to other accounts sending similar content. Twitter has made the point that this will only be used to target abusive content, not material that is merely controversial or unpopular.
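
For the curious, here is a rough idea of how signals like these could be combined into a single score. This is purely an illustrative sketch: the details of Twitter's feature aren't described beyond the inputs listed above, so every name, weight and threshold below (`Tweet`, `abuse_score`, `should_limit_reach`, the 30-day window and so on) is a made-up assumption, not Twitter's implementation.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Tweet:
    text: str
    account_age_days: int
    # Hypothetical stand-in for "proximity": how many accounts connected
    # to the sender have recently been flagged for similar content.
    linked_flagged_accounts: int


def wording_similarity(text, known_abusive):
    """Return the highest similarity ratio (0.0 to 1.0) against known abusive tweets."""
    return max(
        (SequenceMatcher(None, text.lower(), known.lower()).ratio()
         for known in known_abusive),
        default=0.0,
    )


def abuse_score(tweet, known_abusive):
    """Combine the three signals into one score (weights are assumptions)."""
    # Newer accounts score higher; the 30-day window is arbitrary.
    age_signal = max(0.0, 1.0 - tweet.account_age_days / 30)
    similarity_signal = wording_similarity(tweet.text, known_abusive)
    # Cap the proximity signal at five linked accounts (also arbitrary).
    proximity_signal = min(tweet.linked_flagged_accounts, 5) / 5
    return 0.2 * age_signal + 0.5 * similarity_signal + 0.3 * proximity_signal


def should_limit_reach(tweet, known_abusive, threshold=0.6):
    """Flag a tweet for limited reach when its score crosses the threshold."""
    return abuse_score(tweet, known_abusive) >= threshold
```

In a sketch like this, a brand-new account repeating a known abusive message word for word would be flagged, while a long-standing account posting something unrelated would not. A real system would presumably use far richer signals and human review.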

The thought process behind this is twofold: penalising those accounts (and their owners) who engage in abusive behaviour, and reducing the incentive (a large audience, impact and so on) for abusive behaviour. Think about it. If you’re going to engage in a hate-filled rant towards another user, quite likely you’re doing it primarily to hurt the other person, but also because you want others to see what you’re doing and join in. Look at how the Gamergate abuse has continued. If the target of your abuse can’t see it, your abuse doesn’t reach a wide audience because Twitter has already picked it up and limited it, and your account gets locked, where’s the incentive?

This sounds like a sensible policy approach from Twitter, and hopefully its back-end has enough intelligence to pick up on these patterns quickly, so that staff can act swiftly to stop tirades of abuse before they even start.

Ed: Of course, people could just not be arseholes to other people, but this is the Internet, and being awful to someone behind a keyboard is far too easy.