Google Assistant was a key focus of Google’s IO keynote this morning, as you would expect now that it has become core to so many of the company’s services. Google talked about the next generation of Assistant, which will live and act on our devices rather than in the cloud, and some of the new features arriving over the next few months.

Better, faster, on-device speech recognition

Google’s put a lot of time and research into their voice recognition efforts, and we saw earlier in the year that Gboard is now able to do this on-device with a drastically compressed voice model (what used to be 100GB is now 0.5GB).

The company is now bringing this to Assistant, allowing it to recognise your speech much faster (they say 10x faster) and respond to your commands more quickly.

You’ll be able to use the updated Assistant to rapidly control devices in a far more natural way than we have until now. As the Assistant recognises your speech, words appear near the gesture input area at the bottom of your screen, and you can still use the “continued conversation” feature to issue follow-up commands.

Assistant will get more personal

Google’s been training the Assistant to use Personal References in the knowledge graph when servicing your requests. This means you’re able to use references like “mum’s house” and the Assistant will understand that you’re talking about a location and pick the right one for you.

You’ll also start to see personalisation in results on Smart Displays. You’ll be able to ask the Assistant questions like “what should I cook for dinner?” and it’ll display results based on your previous recipe searches. This is called “Picks For You” and will roll out to all Smart Displays soon.

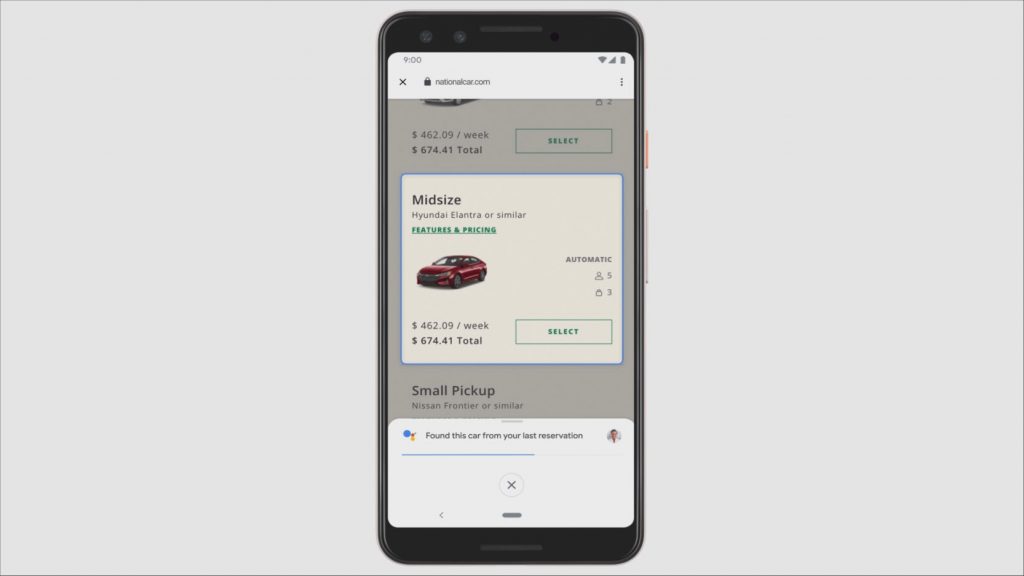

Duplex for the Web

Google wowed us with the debut of Duplex at IO last year, and this year they’re bringing the feature to the web.

You’ll be able to ask the Assistant to make bookings for things like hire cars and theatre tickets, and the Assistant will open up a web browser and navigate its own way through the booking website with a small overlay ensuring you know what it’s doing.

Note that we’re still waiting for the original, voice-flavoured Duplex service to come to Australia.

Lens Enhancements

Google also showed off some neat new features for Lens, their AI image recognition system.

Lens will now be able to reconcile a menu with a restaurant through Google Maps, and highlight popular dishes for you. It’ll also be able to show you photos of those dishes from the restaurant’s Maps listing.

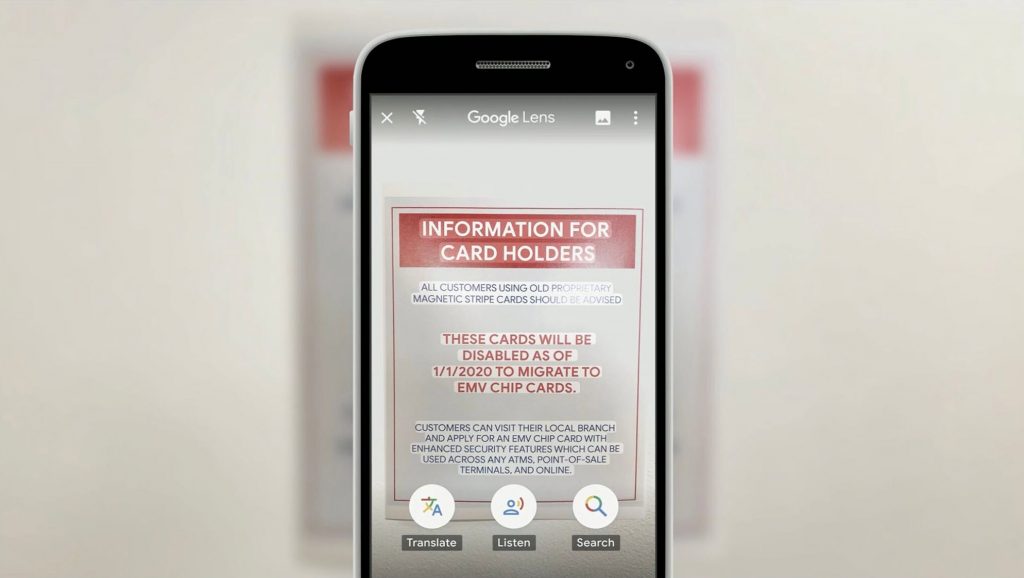

Additionally, if you point Lens at a sign it’ll be able to read it aloud for you, or translate it into your own language.

This last feature was specifically called out as part of the Google Go app, though, which is earmarked for developing markets with lower-spec phones.

Driving Mode

Later this year, you’ll be able to enter an on-device driving mode by saying “Hey Google, let’s drive”. Everything will be voice-activated, and navigation will be controlled by the Assistant with unobtrusive notifications and voice prompts for things like incoming calls.

Assistant’s Driving Mode will be available on any Android phone with the Assistant, and that’s currently over 1 billion devices.