The digital realm is a concern for many parents, given how many ways kids can get themselves into trouble online. Google is attempting to address one of these concerns with changes to its Play Store policies, which it hopes will help those building apps for kids to target their audience more accurately.

After input from users and developers, Google’s new policies aim to provide additional protections for kids on top of the Family Link controls that already exist. The new policies hope to ensure that apps for kids have appropriate content (so who decides what is appropriate for your child or not?), show suitable ads, and handle personal information correctly. They are also designed so that apps NOT intended for kids will not be able to “unintentionally” attract them.

Over the next few months, every single developer on the Play Store will be asked to consider whether kids are part of their target audience:

- If kids are the target audience, the app must meet policy requirements regarding content and handling of personally identifiable information.

- Ads in the app must be appropriate and served from a network that has certified compliance with Google’s families policy.

- If kids aren’t part of the target audience, the app must not unintentionally appeal to them, and this includes app marketing.

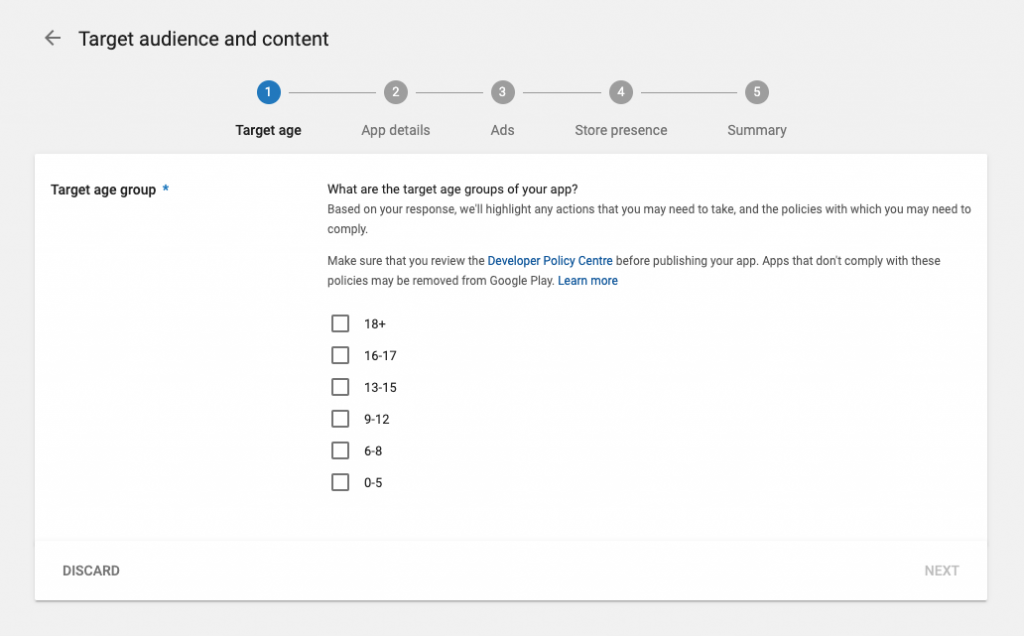

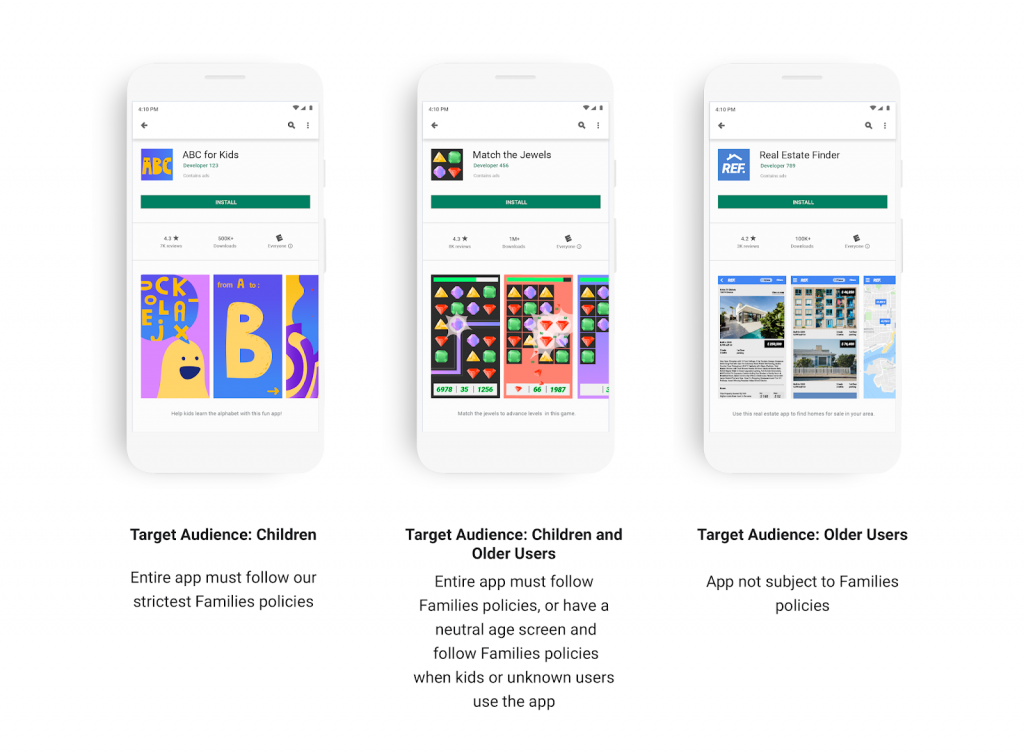

All app developers must now state the target audience of their app in the Google Play Console. If the app is designed to target kids, there will be follow-up questions to make sure it complies with the new policies. Google will then use the answers to categorise the app into one of three target audiences: children, children and older users, or older users.

From today, the target audience and content section is available in the Google Play Console, and all new apps must comply with the new policies before they will be accepted onto the Play Store. Every single app on the Play Store will need to have the target audience and content section completed, and be compliant, by September 1, 2019.

Google have increased their staffing levels for app reviews and appeals (let’s face it, there will be many when a bot is in charge of the original approval process) to help developers get “timely decisions and understand any changes” that are required.

Hopefully this goes some way to helping our kids be safer online, but it is just a small step. There are so many more, much larger ones, required for the online world to be safe for kids.

How do you feel about your kids being online? Why are we talking about serving the CORRECT ads to kids that young? Should they even be seeing ads?