Overnight, Google released a bundle of updates to both Assistant’s features and its development back end at the annual Voice Global conference. While none of these is a splashy headline feature, what was released should meaningfully improve both the user experience and, more importantly, the ease of development.

The updates fall neatly into two categories: user-facing features and developer updates. Let’s take a closer look at both.

User-facing updates

Media API

For the longest time, one of the most requested features for Google’s smart speakers and displays has been the ability to continue long-form audio across devices. It has also long been an issue that third-party podcast apps couldn’t integrate playback on these devices.

Hopefully, the new Media API will allow exactly that. Details available now confirm that you will be able to pick up progress between Google Assistant devices, so if you pause media in the lounge you’ll be able to continue it in the bedroom.

Hopefully, this new API can also hook into media progress across Android, iOS and web-based players.
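To make the idea of picking up progress between devices concrete, here is a minimal conceptual sketch in Python. It is not the Media API itself: the `PlaybackProgressStore` and `Device` classes, their method names, and the in-memory dictionary are all hypothetical stand-ins for whatever shared service Google actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class PlaybackProgressStore:
    """Hypothetical shared store: (user, media) -> last position in seconds."""
    _positions: dict = field(default_factory=dict)

    def save(self, user_id: str, media_id: str, position_s: float) -> None:
        # Remember where this user stopped in this piece of media.
        self._positions[(user_id, media_id)] = position_s

    def resume_position(self, user_id: str, media_id: str) -> float:
        # Unknown media simply starts from the beginning.
        return self._positions.get((user_id, media_id), 0.0)


@dataclass
class Device:
    """Hypothetical Assistant device that reads and writes the shared store."""
    name: str
    store: PlaybackProgressStore

    def pause(self, user_id: str, media_id: str, position_s: float) -> None:
        self.store.save(user_id, media_id, position_s)

    def play(self, user_id: str, media_id: str) -> float:
        # Returns the position (in seconds) at which playback should start.
        return self.store.resume_position(user_id, media_id)


store = PlaybackProgressStore()
lounge = Device("lounge", store)
bedroom = Device("bedroom", store)

lounge.pause("alice", "podcast-42", 754.0)
print(bedroom.play("alice", "podcast-42"))
```

The point of the sketch is that progress is keyed by user and media rather than by device, which is what would let a phone or web player join in if it could read and write the same record.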

Home Storage

The new Home Storage feature will allow developers to build experiences that can save their state across smart displays. The example given is a puzzle game, where you play on one smart display and can then continue on another.

Home Storage is designed to remember state for multiple users across multiple displays. The possibilities for this update are endless. Currently, smart displays and speakers are basically unaware of each other. Any update that improves continuity across smart devices in a house will be welcome.
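The puzzle example can be sketched as a household-level store that keeps a separate state for each user and is shared by every display in the home. This is only an illustration under stated assumptions: `HouseholdStorage`, `SmartDisplay`, and their methods are hypothetical names, not the real Home Storage interface, and the data lives in memory rather than in Google's cloud.

```python
import copy


class HouseholdStorage:
    """Hypothetical household-scoped store shared by all displays in a home."""

    def __init__(self):
        self._state = {}  # user_id -> {key: value}

    def save(self, user_id, key, value):
        # Copy on write so callers cannot mutate stored state by accident.
        self._state.setdefault(user_id, {})[key] = copy.deepcopy(value)

    def load(self, user_id, key, default=None):
        return copy.deepcopy(self._state.get(user_id, {}).get(key, default))


class SmartDisplay:
    """Hypothetical display running the same puzzle Action as its siblings."""

    def __init__(self, name, storage):
        self.name = name
        self.storage = storage

    def place_piece(self, user_id, piece):
        # Load this user's board, add a piece, and write it back.
        board = self.storage.load(user_id, "puzzle", default=[])
        board.append(piece)
        self.storage.save(user_id, "puzzle", board)
        return board


home = HouseholdStorage()
kitchen = SmartDisplay("kitchen", home)
bedroom = SmartDisplay("bedroom", home)

kitchen.place_piece("alice", "corner-1")
print(bedroom.place_piece("alice", "edge-7"))  # Alice continues her game
print(bedroom.place_piece("bob", "corner-3"))  # Bob has his own board
```

Keeping one board per user inside the shared store is what lets several people in the same house play their own games on the same set of displays.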

AMP pages on Smart Displays

It would be easy to dismiss AMP on Smart Displays as simply meaning displaying existing AMP articles, which we’re sure it will do. However, there is something deeper here: AMP is, after all, a collection of web standards that can enable a wide range of functionality.

While not explicitly stated, this may be a move to make the smart display a more complete computing platform. The question is, with smart displays moving beyond voice-only control, do we want deep interactions on these devices?

Personally, I’m happy to see how the platform can use these new tools.

Continuous Match Mode

Continuous Match Mode will introduce the ability for Assistant to “leave the mic open” after a user initiates an Assistant Action. This means the user can conduct a normal, fluid conversation with Assistant without having to wait for the device to be ready to listen.

This will enable a more fluid and dynamic interaction flow with the Assistant and, again, we’re interested to see what meaningful uses developers can put these improvements to.

Developer updates

Alongside these new features, Google has also provided developers with enhanced development tools to make implementing these and other features easier.

Action Builder

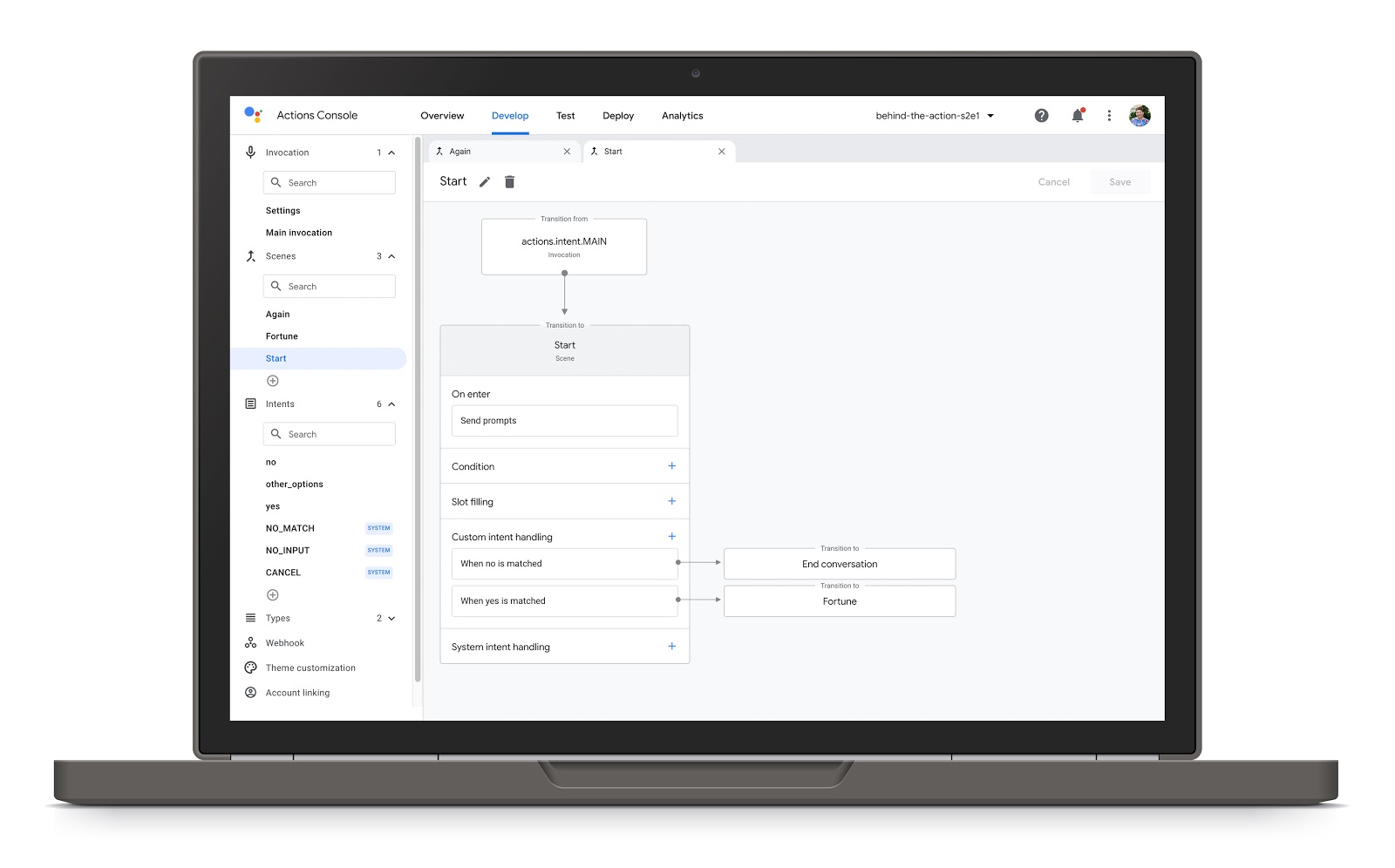

Action Builder is a new web-based IDE (Integrated Development Environment) that provides developers with a graphical user interface for developing conversation flows. Similar to other drag-and-drop programming interfaces, the tool is intended to make rapidly developing conversation flows easier, especially for those without a strong coding background.

Action Builder is fully integrated into the Assistant management console, the Action Console, allowing streamlined development, testing and deployment of Assistant Actions.

Actions SDK

Of course, not every programmer wants to use a GUI to develop their apps and services, so Google has released the Actions SDK, which integrates with the IDE of your choice and provides a command line interface for programming Actions.

The new SDK will also integrate with Action Builder and the Action Console, meaning that developers or development teams can work seamlessly between the tools while developing the same Action.

Overall, these are a great set of enhancements to both the Assistant platform and its associated development tools. With voice UI becoming more accepted, and smart displays finding their place in people’s lives, it’s great to see new features and tools rolling out to the platform.

Hoping the Media API can be used across to Android devices like you say. Long have I dreamed on the way home of listening to some music/podcast on my phone and then transitioning that point in time directly onto my Assistant speakers when I get home

Andrew, this is the dream for many