The online world is a wonderful place when you can access it easily, particularly with voice controls on smart devices. But what if you can’t use voice control? Plenty of people are in exactly that position because of a variety of conditions and disabilities. Augmentative and Alternative Communication (AAC) is very common in this space, so it makes a lot of sense for Google to work with one of the AAC providers to integrate Google Assistant.

Tobii Dynavox is one of the AAC offerings on the market that give a voice to people who can’t speak, and it has now brought Google Assistant on board.

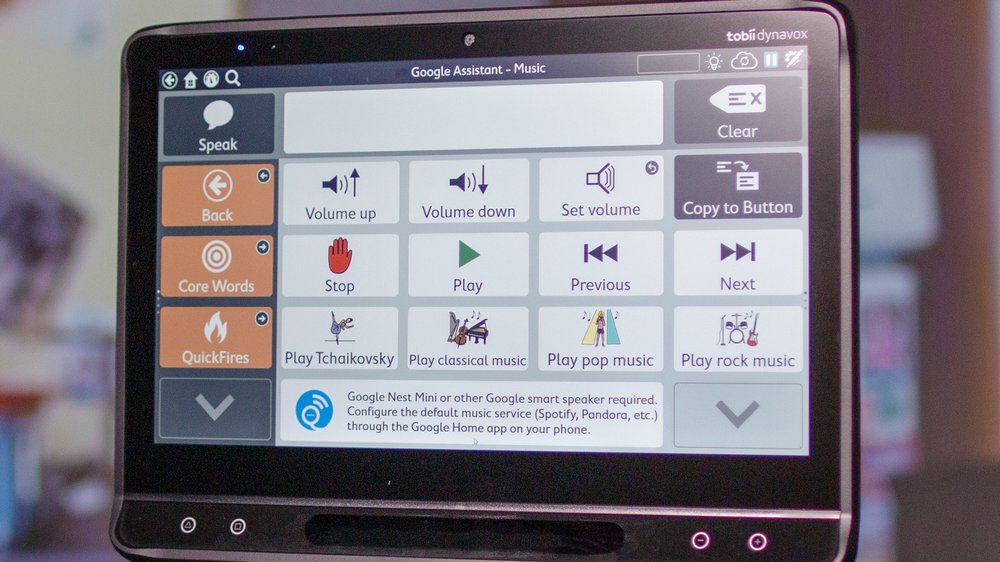

Snap Core First, available on Tobii Dynavox’s dedicated tablets and mobile apps, has a preconfigured set of tiles that let users communicate about everyday things, and it can be accessed through touch, eye gaze and scanning. With Google Assistant integrated into Tobii Dynavox’s technology, Becky (a case study referenced on the Google Blog) can now easily assign a tile to a Google Assistant action. This lets her control compatible smart home devices and appliances, such as lights, thermostats and TVs, that have been set up in the Google Home app. Tiles can also be configured to get answers from Google Assistant to questions like “What’s the weather?” or “What’s on the calendar for today?”

Although Tobii Dynavox’s devices are hugely complex pieces of equipment, supporting eye gaze as well as touch input, setting up the integration is very simple. You’ll need a Google account. Once you’ve granted the Snap Core First app access to it, you just edit a tile, choose “Add action” and complete it with the “Send Google Assistant command” action.

Google’s inclusive development didn’t stop at that integration, though. Action Blocks, Google’s Android app for creating home-screen buttons that trigger Google Assistant commands, has been developed further with a focus on non-verbal users.

Through the Tobii Dynavox partnership, Action Blocks gives users a wide range of Picture Communication Symbols to choose from. This also makes AAC far more portable, since Action Blocks works on any Android device without the need for additional hardware. That said, additional hardware can still be used by people who need a physical trigger for certain everyday functions.

Clearly, Google is strongly committed to expanding accessibility, so there’s likely more to come in the near future. It will be very interesting to see what it brings forward next.