For those who have hearing difficulties, Live Transcribe made quite an impact when it was launched. It gives people with varying levels of hearing impairment the opportunity to take a more active part in conversation, even in relatively noisy environments.

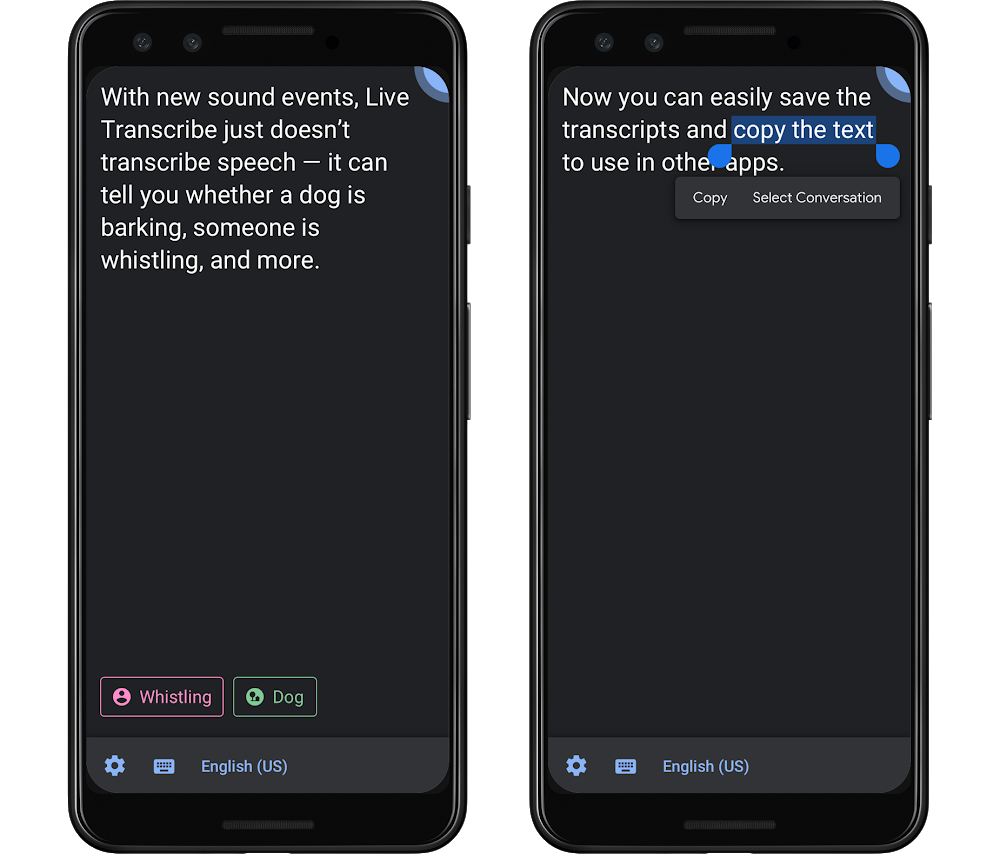

If you’ve used it, you will have noticed that Live Transcribe captures only speech, with no description of other sound events around you. A significant AI-driven update addresses this by displaying nearby sound events on the screen:

You can see, for example, when a dog is barking or when someone is knocking on your door. Seeing sound events allows you to be more immersed in the non-conversation realm of audio and helps you understand what is happening in the world.

The other major change in this update is the ability to save transcriptions, which are stored on your device for three days, and to copy segments of the text. This is immediately handy for referring back to a recent conversation, but Google’s blog post also notes the flow-on effects for other groups and professions:

> It also helps those who might be using real-time transcriptions in other ways, such as those learning a language for the first time or even, secondarily, journalists capturing interviews or students taking lecture notes.

Google closes by acknowledging that there is further work to do in the tech space to improve inclusivity. Having a company the size of Google doing this work is a huge step, and it will (hopefully) open others’ eyes to the further needs in the community.