The official way to take a photo using Google Glass is to touch the side, or nod your head to wake the device, and then say aloud ‘OK Glass, take a picture’. Not very cool or discreet (if you’re into that sort of thing).

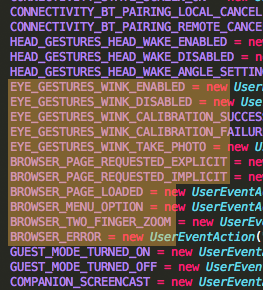

According to Reddit user fodawim, the Glass companion application contains some interesting pieces of code that suggest Google plans to include spatial gestures to make interacting with the device easier. Examples of the gestures include HEAD_GESTURES_HEAD_WAKE (which we already knew about), EYE_GESTURES_WINK_TAKE_PHOTO, and BROWSER_TWO_FINGER_ZOOM. EYE_GESTURES_WINK_TAKE_PHOTO is an interesting idea, although I’m not sure how Glass in its current form could detect you winking. If it makes it into the final shipping edition, it will make taking photos with Glass so much creepier. Interestingly, the code also contains an element called COMPANION_SCREENCAST, which I hope is a way to share the output of the unit’s display so that others can see what you’re seeing in real time.

I’m not convinced that winking is the best way to take photos using Glass, and I can only imagine what other facial contortions could be used to trigger different actions, but pinching your fingers together in front of Glass’ camera to zoom in on web pages and maps is a brilliant idea. Each story about Glass just makes other wearable computing devices, like ‘smart watches’, seem more dated and useless, and I really hope that I don’t have to wait an entire year to get my hands on a pair.

Hehehe, squint to zoom…