At its ATAP (Advanced Technology and Projects) event, which promised to ‘knock your socks off’, Google has announced a new way to interact with wearables, called Project Soli.

Drawing on ‘The information capacity of the human motor system’, a research paper written by Paul Fitts in 1954, and reinforced by more recent research by Ivan Poupyrev, Project Soli sees the small screens on wearables as the perfect platform to introduce a gesture control system.
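The article cites Fitts's 1954 paper without stating its result. As background, here is a minimal sketch of the relationship now known as Fitts's law in its original form, ID = log2(2D/W), where D is the distance moved to a target and W is the target's width, with predicted movement time MT = a + b·ID. The helper names and the constants `a` and `b` are hypothetical, chosen for illustration; nothing here is taken from Soli itself.

```python
import math


def index_of_difficulty(distance, width):
    """Fitts's original index of difficulty, in bits: ID = log2(2D / W)."""
    return math.log2(2 * distance / width)


def movement_time(distance, width, a, b):
    """Predicted movement time MT = a + b * ID; a (s) and b (s/bit) are fitted empirically."""
    return a + b * index_of_difficulty(distance, width)


# Difficulty depends only on the ratio of distance to width.
print(index_of_difficulty(20, 10))  # 20 mm reach to a 10 mm target -> 2.0 bits
print(index_of_difficulty(2, 1))    # same ratio, 10x smaller movement -> 2.0 bits
print(index_of_difficulty(20, 5))   # halving the target width adds one bit -> 3.0 bits

# Hypothetical constants a = 0.1 s and b = 0.15 s/bit, for illustration only.
print(movement_time(20, 2.5, a=0.1, b=0.15))
```

Because only the ratio D/W matters, a movement scaled down proportionally carries the same difficulty in bits, while shrinking a target without shrinking the reach makes it harder to hit.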

The system uses radar operating at 60GHz and capturing at 10,000fps to detect user movement, potentially giving users more controls than a touch screen currently allows. It captures motion as Range Doppler, Micro Doppler, spectrogram and IQ (in-phase and quadrature) data, which is fed into a machine learning algorithm that interprets gestures, such as swipes or the motion of turning a knob or dial, as inputs to software.
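The article doesn't describe Soli's signal chain in detail, so the following is only a minimal sketch of one representation it names, a range-Doppler map, under stated assumptions: a simulated FMCW (frequency-modulated continuous-wave) radar frame, a single point target, and a plain 2D FFT. Only the 60GHz carrier comes from the article; every other constant (`BANDWIDTH`, `FS`, `N_CHIRPS`, `CHIRP_REPEAT`, and so on) and the helpers `simulate_iq_frame` and `range_doppler_map` are hypothetical. It requires NumPy.

```python
import numpy as np

C = 3.0e8                    # speed of light, m/s
F_CARRIER = 60e9             # 60 GHz carrier, as stated in the article
WAVELENGTH = C / F_CARRIER   # about 5 mm

# Hypothetical FMCW parameters, for illustration only (not Soli's real settings).
BANDWIDTH = 4e9              # chirp sweep bandwidth, Hz
N_SAMPLES = 64               # fast-time ADC samples per chirp
N_CHIRPS = 32                # slow-time chirps per frame
FS = 2e6                     # ADC sample rate, Hz
T_CHIRP = N_SAMPLES / FS     # chirp duration, s
SLOPE = BANDWIDTH / T_CHIRP  # frequency sweep rate, Hz/s
CHIRP_REPEAT = 250e-6        # time between chirp starts, s

RANGE_RES = C / (2 * BANDWIDTH)                        # metres per range bin
VEL_RES = WAVELENGTH / (2 * N_CHIRPS * CHIRP_REPEAT)   # m/s per Doppler bin


def simulate_iq_frame(range_m, velocity_mps, noise_std=0.1, seed=0):
    """Hypothetical helper: de-chirped complex (IQ) frame for one point target."""
    rng = np.random.default_rng(seed)
    n = np.arange(N_SAMPLES)                    # fast-time sample index (columns)
    m = np.arange(N_CHIRPS)[:, None]            # slow-time chirp index (rows)
    f_beat = 2 * SLOPE * range_m / C            # beat frequency encodes range
    f_doppler = 2 * velocity_mps / WAVELENGTH   # Doppler shift encodes radial velocity
    phase = 2 * np.pi * (f_beat * n / FS + f_doppler * m * CHIRP_REPEAT)
    noise = noise_std * (rng.standard_normal(phase.shape)
                         + 1j * rng.standard_normal(phase.shape))
    return np.exp(1j * phase) + noise


def range_doppler_map(iq):
    """Magnitude map: rows are velocity (Doppler) bins, columns are range bins."""
    window = np.outer(np.hanning(N_CHIRPS), np.hanning(N_SAMPLES))  # reduce sidelobes
    spectrum = np.fft.fft2(iq * window)            # FFT across chirps and across samples
    spectrum = np.fft.fftshift(spectrum, axes=0)   # put zero velocity in the middle row
    return np.abs(spectrum)


# Usage: a target 30 cm away moving at 0.625 m/s along the line of sight.
rd = range_doppler_map(simulate_iq_frame(range_m=0.30, velocity_mps=0.625))
doppler_idx, range_idx = np.unravel_index(np.argmax(rd), rd.shape)
est_range = range_idx * RANGE_RES
est_velocity = (doppler_idx - N_CHIRPS // 2) * VEL_RES
print(f"range ~ {est_range:.3f} m, velocity ~ {est_velocity:.3f} m/s")
```

The FFT along each chirp (fast time) separates targets by range, and the FFT across chirps (slow time) separates them by velocity using the chirp-to-chirp phase shift. At 60GHz the wavelength is only about 5 mm, so even slow finger motion produces a noticeable phase change between chirps. In a gesture system, a sequence of maps like this could serve as input features for a learned classifier.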

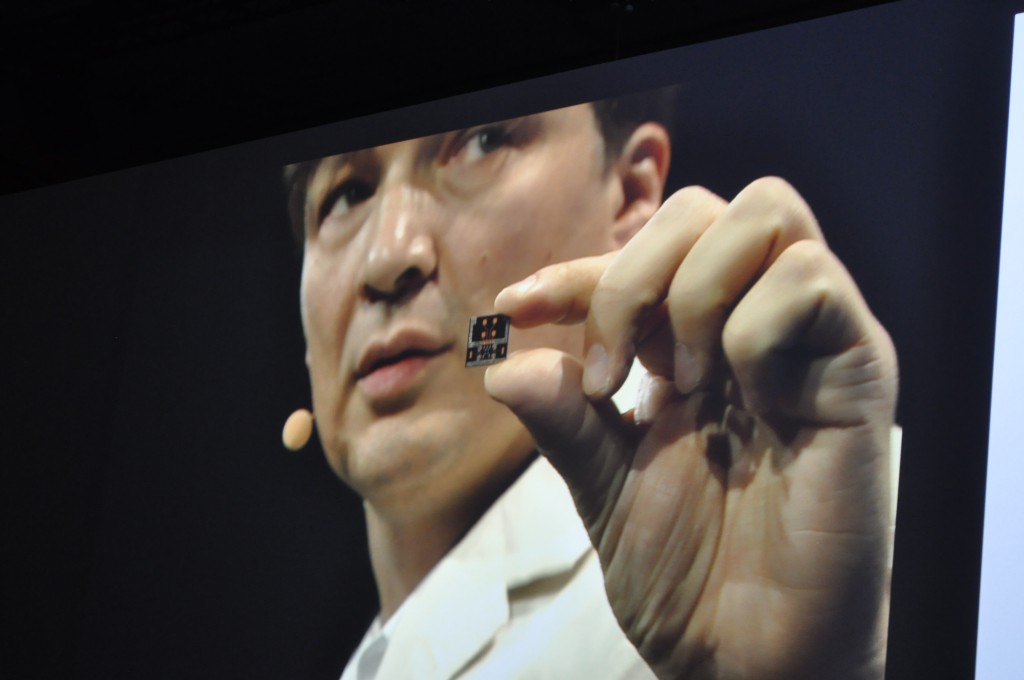

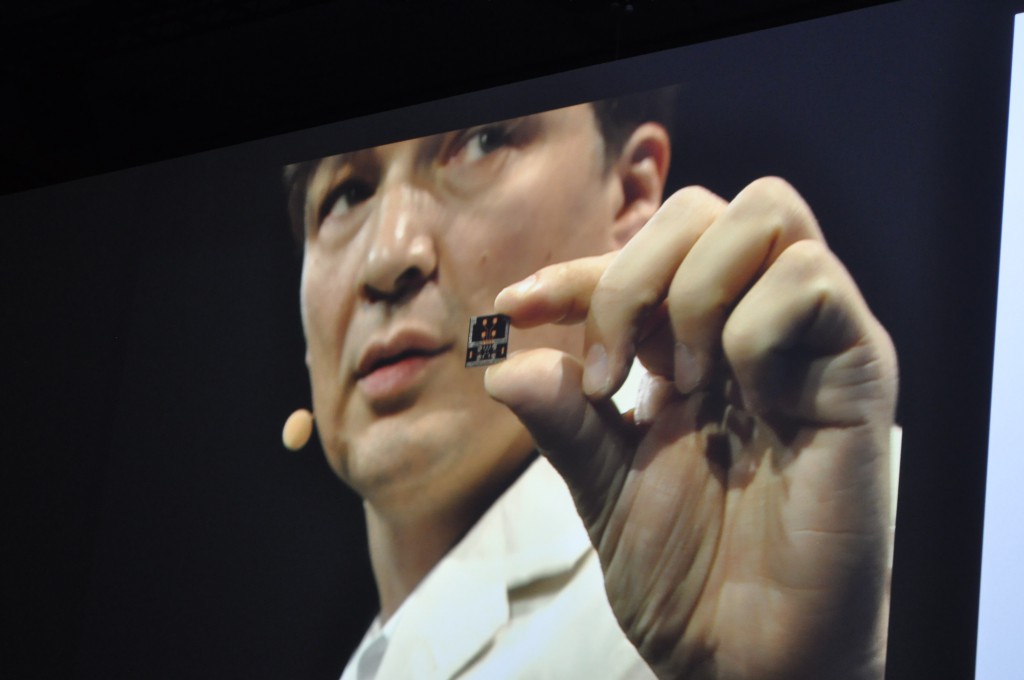

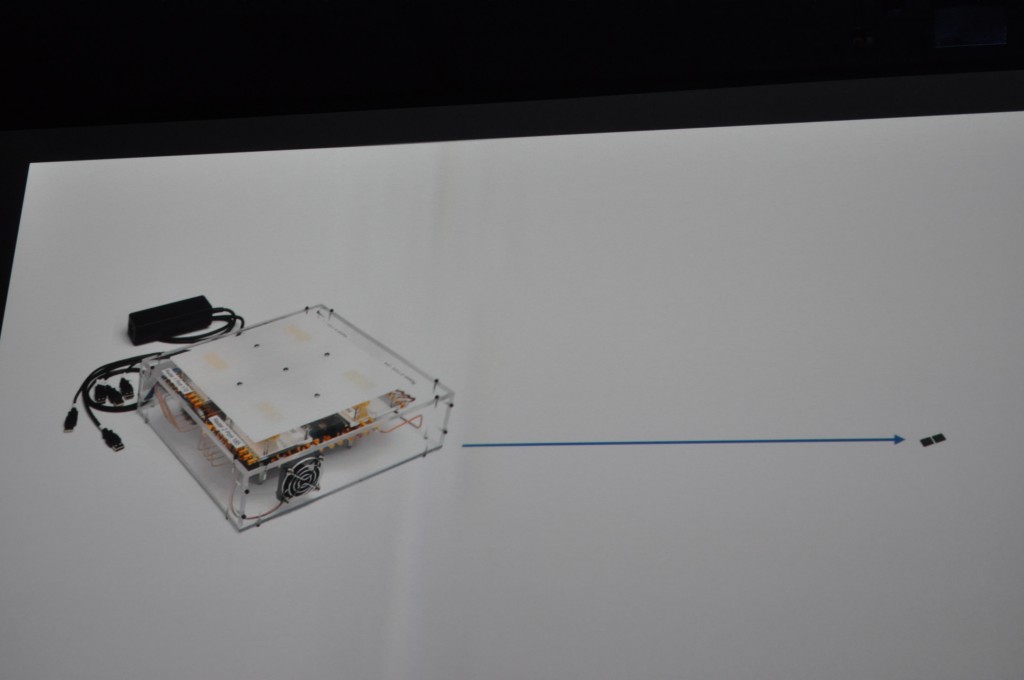

The lean nature of the Google ATAP group, which is, in their words, ‘optimised for speed’, has allowed the group to go from a proof of concept the size of a small shoebox to a sensor the size of a microSD card in just 10 months.

The final product hasn’t been announced yet, but a dev kit will be seeded to developers later this year, along with more information.