Google has a strong history of building accessibility features into its products so that people of all abilities can use them. At Google I/O this week, the company introduced a new app called Lookout, which aims to help people who are blind or visually impaired experience the world around them.

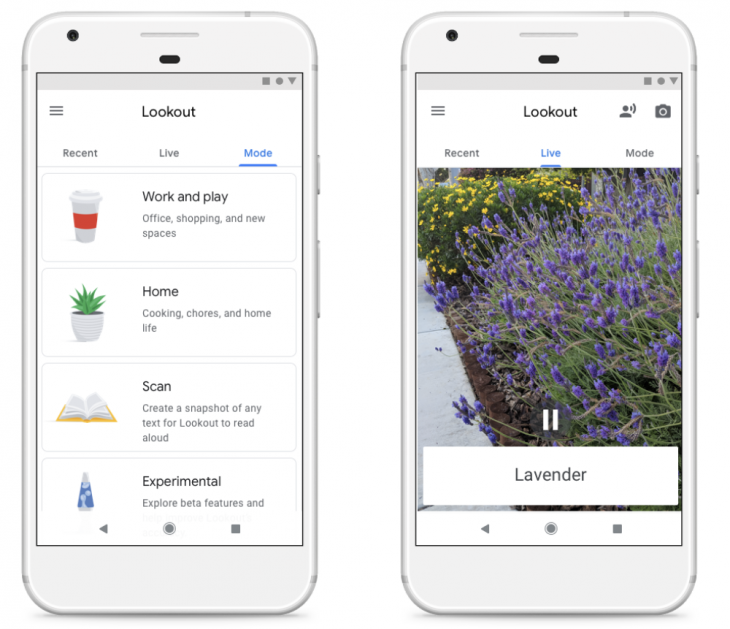

The app aims to give blind or visually impaired users more independence by providing auditory cues about the objects, text and people they encounter in everyday life. It is apparently designed to run on Google Pixel phones, which users wear around the neck in a lanyard holder or carry in a front shirt pocket with the camera lens facing outward so it can see the world.

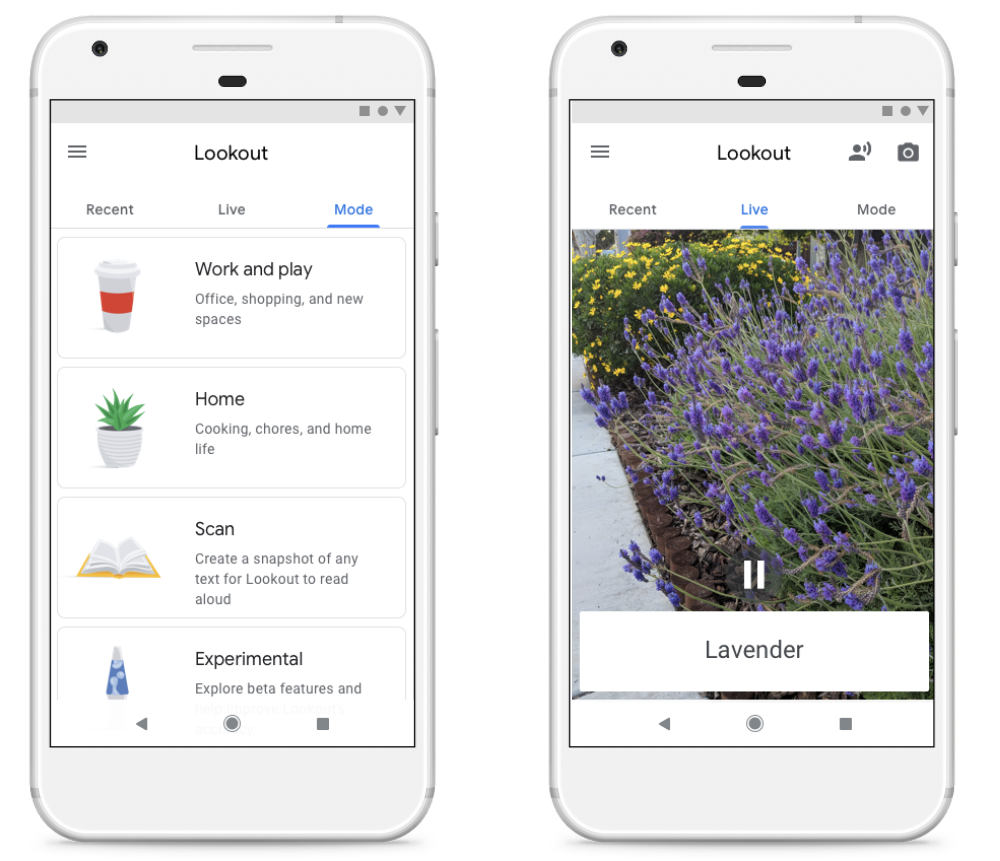

The Lookout app can be set to one of four modes: Home, Work & Play, Scan or Experimental, each suited to a different part of daily life.

Google says the app doesn’t require an internet connection, which suggests it relies on on-device processing to interpret the visual data coming in through the camera.

Maya Scott, a theater and arts instructor who was born with a visual impairment, has been using Lookout to help in her daily life.

The Lookout app is coming to Google Play in the US later this year. Google hasn’t said whether availability will expand beyond the US, but it should