In 2019, we tend to take for granted that our phones can turn spoken words into text when we’re on the go or don’t have a free hand to type. We forget, though, that for some people this isn’t possible because of speech impairments.

Impairments that make it difficult for someone to speak also make it difficult for other people to understand them, and can cause speech recognition systems to fail to recognise their voice entirely. Communication is hard, and the recent advances in voice recognition won’t benefit these people, because the systems are trained to understand clearly articulated speech.

Today Google devoted a section of its Google I/O keynote presentation to Project Euphonia, an effort to address this problem.

They’ve been working with people who have ALS (also known as Lou Gehrig’s disease), a neurodegenerative disease that can cause sufferers to lose the ability to speak and move, as well as with the organisations that support them.

The work has focused on training speech recognition models to work for users with speech impairments. In some cases the models respond to sounds rather than words, and in others even to gestures.

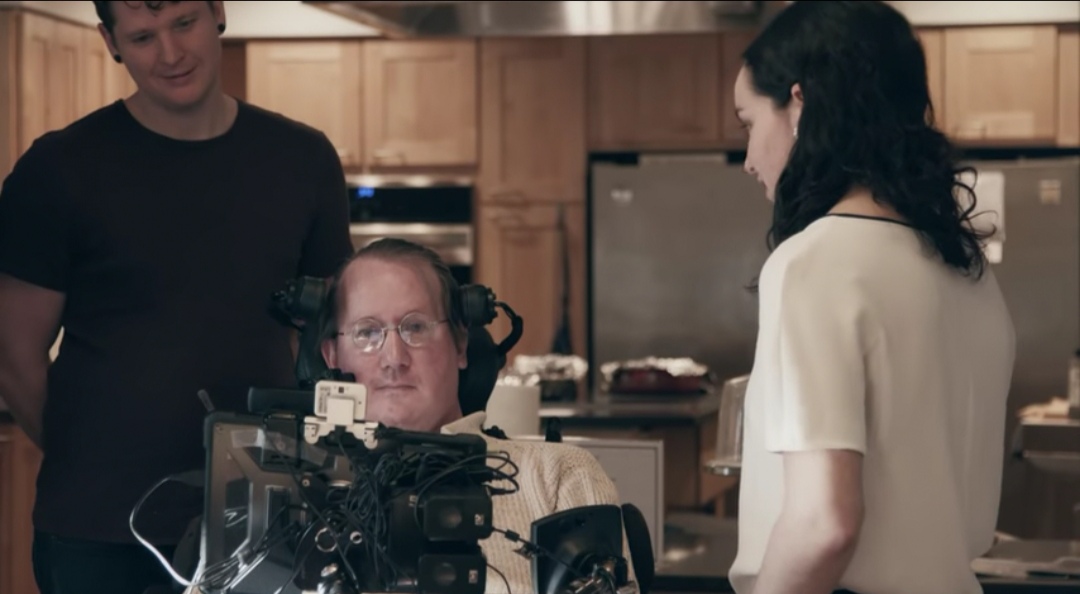

They’ve also been using custom-trained voice models to help deaf users who can’t articulate words clearly, such as Googler Dimitri Kanevsky, seen in the video below.

It’s remarkable to see technology we take for granted extended and applied in these ways. These users can now use voice control features such as voice-to-text input on their devices, or issue commands to Google Home, and they can take part in conversations like never before.

Dimitri recorded 15,000 phrases to train his voice model. That’s a lot of work, but as the video demonstrates, it has been quite successful. The goal, of course, is to help everyone in this situation, so the company is asking people with speech impairments to contact the Project Euphonia team via this form and submit voice samples to help train its voice recognition model.