The Google Pixel 4 arrived with a dedicated radar chip designed to bring Google's Project Soli to fruition. Project Soli was reborn under the new moniker Motion Sense. While it was extremely limited (and buggy) at first, it has improved over time as Google has added more functionality to it, albeit only slightly.

Motion Sense is used to aid the Google Face Unlock experience and to control music, and that's pretty much it (aside from draining the battery). Google hopes to get developers to expand the feature by releasing the Soli Sandbox app.

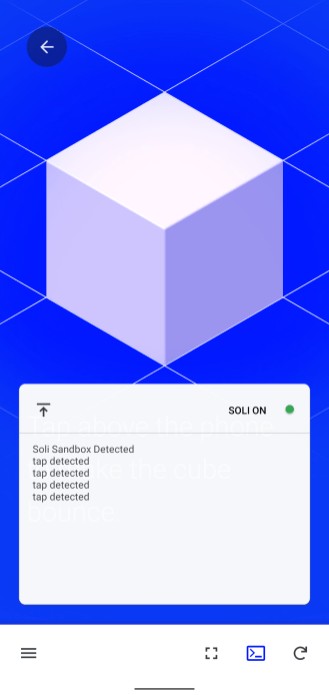

The app is not a tool for creating Motion Sense projects. Instead, it lets developers test air gestures on their own phone in real life, giving them a way to connect their "web prototypes to Soli interactions on Pixel 4."

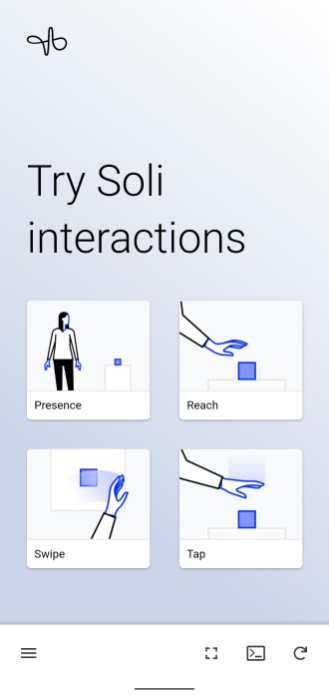

The app looks very basic: it includes only four gestures and four prototypes, and developers' own projects can be loaded via a URL or from local files on the device.

- A presence event is sent any time Soli detects one person within about 0.7 metres (2.3 ft) of the device.

- A reach event is sent any time Soli detects a movement that resembles a hand reaching toward the device, within about 5-10 cm.

- A swipe event is sent any time Soli detects movement that resembles a hand waving above the device, similar to opening a curtain in either direction.

- A tap event is sent when Soli detects a movement that resembles a single hand bounce above the centre of the phone. A tap feels similar to dribbling a basketball.

The app is only available in countries where Motion Sense is enabled, and Australia is one of them. Hopefully it will encourage developers to bring more functionality to Motion Sense, as many users are disappointed with its current form.

To me, the big takeaway is that by releasing this now, Google is showing it won't let Motion Sense die off after appearing in a single device. We should therefore expect it to arrive on the Pixel 5, despite rumours to the contrary.

Why would Google do this if its next phone won't have it?