During the Google I/O keynote earlier, Google announced some innovative new features coming to the Nest Hub Max. ‘Look and Talk’ is exactly what it sounds like: opt in to this feature, and Face Match and Voice Match will recognize you, so you can simply look at the screen and ask for what you need, with no activation phrase necessary. Additionally, any video captured during these interactions is processed on the Nest Hub Max itself and isn’t shared with Google or anyone else.

To make this kind of technology work, Google has had to build some very interesting software. Six machine learning models process 100 input signals from the camera and the microphone, such as head orientation, lip movement and your proximity to the device, and they do it all in real time.

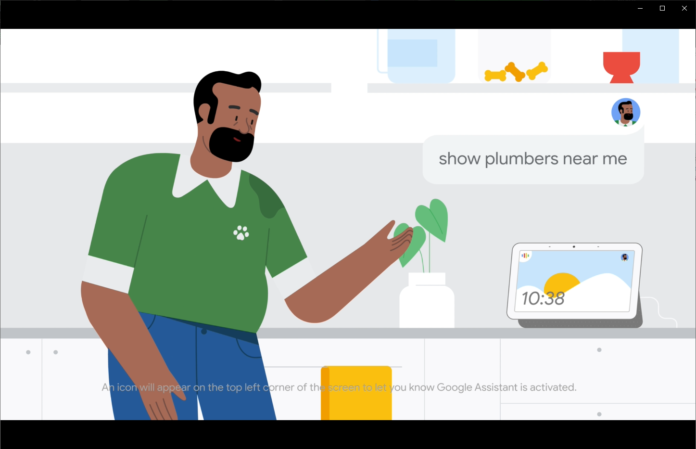

The Nest Hub Max is also getting expanded Quick Phrases. Again, it’s an opt-in feature, but if you enable it, you’ll be able to choose phrases that work without saying “Hey Google” first whenever Voice Match recognizes your voice. Simply say ‘what time is it?’ or ‘turn on the lights’.

Thanks to the new Tensor chip, the Nest Hub Max will be able to understand more conversational speech. Tensor’s machine learning capabilities will let the Nest Hub Max cope with ‘umm’s and with pauses, such as when you stop to remember the name of a band that’s on the tip of your tongue, making your interactions with the device feel closer to a conversation with another person.

For now, these new features are available only on the Nest Hub Max and only to users in the US. However, features like these usually do get rolled out worldwide, so keep an eye on your updates!