Nvidia is kicking off CES 2015 in Las Vegas and has already announced their latest mobile computing platform, the Maxwell-based Tegra X1. Nvidia is also announcing two new technologies for cars based on the Tegra X1: the DRIVE CX cockpit computer and the DRIVE PX Advanced Driver Assistance System (ADAS).

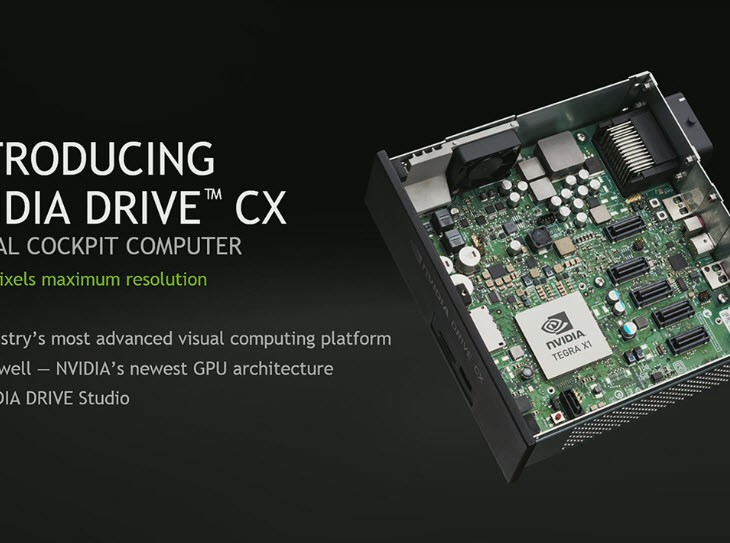

DRIVE CX

The DRIVE CX cockpit computer aims to replace the internals of both your car’s in-car entertainment system and its instrument cluster. It drives displays such as the speedometer and tachometer, allowing for skinned displays, as well as the entertainment and GPS/video functions of your car’s entertainment system. This will include heads-up displays, mirrors, and even the rear screens on the headrests for passengers.

The DRIVE CX system uses the Tegra X1 processor announced this afternoon, which pairs a 64-bit octa-core CPU with a 256-core GPU. Nvidia says it has the ability to ‘process nearly 17 megapixels, equivalent to two 4K screens’.
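As a quick sanity check on that figure, here is a minimal sketch of the arithmetic. It assumes "4K" means the common UHD resolution of 3840 x 2160; Nvidia's quote doesn't specify which 4K variant is meant, and the function name is just for illustration.

```python
# Assumption: "4K" here means UHD (3840 x 2160). The quote from Nvidia
# does not say which 4K resolution is intended.
UHD_WIDTH = 3840
UHD_HEIGHT = 2160


def megapixels(width, height, screens=1):
    """Return the total pixel count of `screens` displays, in megapixels."""
    return width * height * screens / 1_000_000


two_4k_screens = megapixels(UHD_WIDTH, UHD_HEIGHT, screens=2)
print(f"{two_4k_screens:.1f} MP")  # prints "16.6 MP"
```

Under that assumption, two 4K screens come to roughly 16.6 megapixels, which lines up with the "nearly 17 megapixels" claim.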

But it’s not just hardware: Nvidia’s DRIVE CX uses DRIVE Studio, which is compatible with a multitude of software options, from QNX through to Linux and Android. Nvidia also has a developer program that works with car makers.

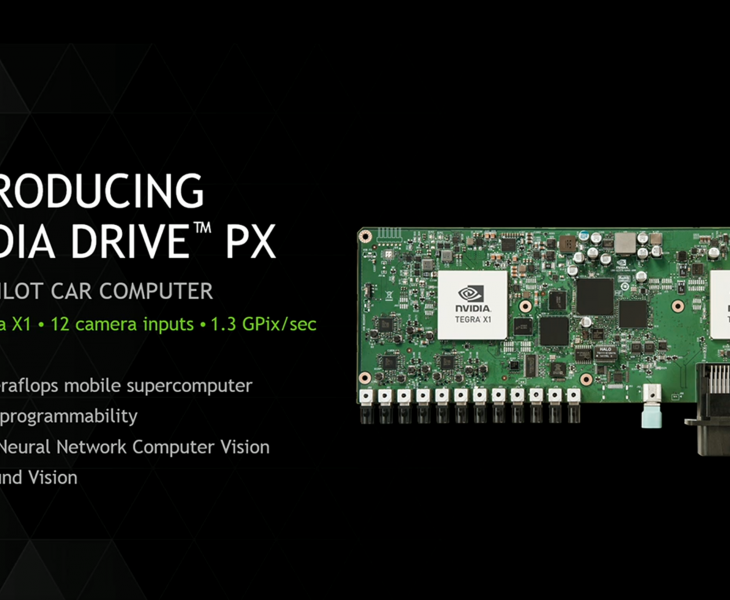

DRIVE PX

Nvidia has also announced a further move into car automation and driver assistance with their DRIVE PX Advanced Driver Assistance System. DRIVE PX is a powerful system: think of the ability to recognise objects and your surroundings using high-resolution cameras.

The DRIVE PX system also uses the newly announced Tegra X1 processor, but in a dual-Tegra X1 setup offering up to 2.3 teraflops of processing power, along with CUDA programmability and up to 12 camera inputs. The 12 camera inputs will feed the ‘deep neural network’, which will process the information captured by the cameras in real time. Nvidia envisions these cameras eventually replacing the ultrasound and radar sensors that cars currently use to enable things like parking assist.

The DRIVE PX system is fully programmable: it can be trained to recognise objects and will then recognise them as it sees them in real time. The cameras and DRIVE PX system will be able to recognise objects and contexts, including traffic lights and crosswalk signs, and to differentiate between vehicle models, as well as between an ordinary vehicle coming up behind you and an emergency services vehicle with lights blazing that you need to get out of the way of.

The whole concept of the deep neural net will allow for a lot of on-the-fly learning and sharing of information throughout the Nvidia DRIVE PX fleet. According to Nvidia:

It’s not clear at this stage if Android will be used as the OS, and we’re still hearing smaller details about both systems from Nvidia, as well as from one of their car maker partners, Audi. It appears that if you’re in the market for a new car with a DRIVE CX or DRIVE PX system, an Audi may be the way to go in the future.

Hmm, recognise and detect cop cars, speed cameras, radar traps, etc. and you’ve got a winner on your hands. Wide-angle dashcam for normal oversight, and a telephoto mode for looking down the road and spotting trouble ahead.

Let’s see it in an add-on unit though.