Dual cameras. Everyone seems to be doing it, but do we really need them? Google don’t think so: they have bucked the trend and put a single camera on the rear of their second-generation Pixel phones.

Despite having only a single lens on the back of the Pixel 2, Google have once again topped the DxOMark smartphone rankings, with a score of 98. The phone also offers tricks usually reserved for dual cameras, such as portrait mode, all from that one lens. So how did Google do it? Nat, the Googler who uses her 20% time to tell the world about different projects at Google, decided to dig into it and produced a video that explains why the camera is so good.

Watched in full, the video gives great insight into how Google use a single-lens camera to achieve what others can only do with two.

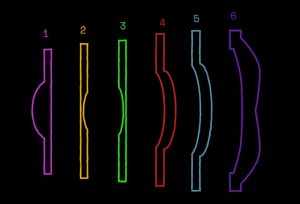

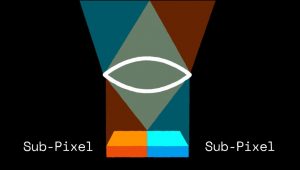

It all begins with six lens elements in the camera, which correct for the aberrations that distort an image. Then there is Optical Image Stabilisation (OIS), which is new to the Pixel line, as well as the Electronic Image Stabilisation (EIS) found on last year’s model. Then there is the sensor. It is a 12MP sensor, but each pixel is split into left and right halves, which gives the camera new capabilities: it can gauge depth and improve autofocus.
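To make the split-pixel idea more concrete: the left and right halves of each pixel see the scene from very slightly different positions, so out-of-focus detail appears shifted between the two sub-images, and the size of that shift depends on how far a point is from the plane of focus. The video doesn’t describe Google’s actual depth pipeline, so the Python sketch below is only an illustration of the textbook approach: block matching on one row of hypothetical left/right sub-pixel readings, with whole-pixel shifts. The function name and values are made up for this example.

```python
def estimate_disparity(left, right, max_shift=3):
    """Return the whole-pixel shift that best lines up `right` with `left`.

    A positive result means features in `right` sit further to the right than in `left`.
    Cost is the mean absolute difference over the overlapping samples
    (assumes max_shift < len(left), so every shift has some overlap).
    """
    best_shift, best_cost = 0, float("inf")
    for shift in range(-max_shift, max_shift + 1):
        diffs = [abs(left[i] - right[i + shift])
                 for i in range(len(left))
                 if 0 <= i + shift < len(right)]
        cost = sum(diffs) / len(diffs)
        if cost < best_cost:  # keep the best-matching shift seen so far
            best_shift, best_cost = shift, cost
    return best_shift


# Hypothetical readings: the same edge, seen one pixel further right by the right-hand halves.
left_view = [0, 0, 10, 50, 10, 0, 0, 0]
right_view = [0, 0, 0, 10, 50, 10, 0, 0]
print(estimate_disparity(left_view, right_view))  # 1
```

A real pipeline would match 2-D patches, estimate sub-pixel shifts and convert disparity into depth using calibration data; all of that is left out here.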

Once the image is captured, it goes through 30 to 40 post-processing steps. A physical colour filter sits over the sensor so that each pixel collects only one colour (i.e. just red, just green or just blue); these readings are then combined to make the full-colour image. This process is called demosaicing.
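Google haven’t published the Pixel 2’s exact demosaicing method. The Python sketch below assumes the common RGGB Bayer layout and uses plain bilinear interpolation, the textbook baseline: each pixel keeps the colour it measured and fills in the other two by averaging same-colour neighbours in a 3x3 window. Function names and values are hypothetical.

```python
def bayer_channel(row, col):
    """Colour passed by the filter at (row, col) in an RGGB mosaic: 0=R, 1=G, 2=B."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2


def demosaic_bilinear(raw):
    """Turn a single-channel mosaic into [R, G, B] per pixel (image must be at least 2x2)."""
    height, width = len(raw), len(raw[0])
    rgb = [[[0.0, 0.0, 0.0] for _ in range(width)] for _ in range(height)]
    for r in range(height):
        for c in range(width):
            for ch in range(3):
                if bayer_channel(r, c) == ch:
                    rgb[r][c][ch] = float(raw[r][c])  # measured directly
                    continue
                # Missing colour: average the same-colour neighbours in the 3x3 window.
                samples = [raw[rr][cc]
                           for rr in range(max(r - 1, 0), min(r + 2, height))
                           for cc in range(max(c - 1, 0), min(c + 2, width))
                           if bayer_channel(rr, cc) == ch]
                rgb[r][c][ch] = sum(samples) / len(samples)
    return rgb


# A flat scene whose true colour is (R=200, G=100, B=50), as seen through the RGGB filter.
true_colour = (200, 100, 50)
raw = [[true_colour[bayer_channel(r, c)] for c in range(4)] for r in range(4)]
image = demosaic_bilinear(raw)
print(image[1][2])  # [200.0, 100.0, 50.0]
```

Bilinear interpolation blurs fine detail and can produce colour fringes at edges, which is one reason real camera pipelines use more elaborate demosaicing.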

This is followed by many other post-processing steps, including gamma correction, white balance, denoising and sharpening. All of this is done in software and is called computational photography: advanced algorithms that “super-power image processing”.
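Google don’t detail their white balance or tone curve. As a rough illustration of two of the steps named above, the Python sketch below uses the classic “grey world” white balance (assume the scene averages out to grey and scale each channel to match) and a plain power-law gamma of 2.2. Both are textbook stand-ins, not the Pixel’s algorithms, and the names are hypothetical.

```python
def gray_world_white_balance(pixels):
    """Scale each channel so the image's average colour becomes neutral grey.

    pixels: list of [R, G, B] linear values in [0, 1]. Assumes no channel averages to zero.
    """
    n = len(pixels)
    means = [sum(p[ch] for p in pixels) / n for ch in range(3)]
    target = sum(means) / 3
    gains = [target / m for m in means]
    return [[min(p[ch] * gains[ch], 1.0) for ch in range(3)] for p in pixels]


def gamma_encode(pixels, gamma=2.2):
    """Apply a simple power-law curve that brightens mid-tones for display."""
    return [[v ** (1 / gamma) for v in p] for p in pixels]


# Hypothetical pixels with a blue colour cast.
pixels = [[0.2, 0.25, 0.4], [0.4, 0.5, 0.8]]
balanced = gray_world_white_balance(pixels)
print([[round(v, 3) for v in p] for p in balanced])
# [[0.283, 0.283, 0.283], [0.567, 0.567, 0.567]]
display = gamma_encode(balanced)  # white balance on linear data first, then gamma
```

The order matters: white balance is applied to linear sensor values, and gamma is applied afterwards so the image looks right on a display (a linear 0.25 comes out at roughly 0.53).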

Computational photography enables HDR+ mode and the new Portrait Mode.

HDR+ is an algorithm that takes a small sensor and makes it act like a big one. It captures a burst of images and merges them into a single HDR+ image, which gives great low-light performance and a high dynamic range, so you can capture dark and bright things in the same image. A further post-processing step checks for movement across the images in the burst; where something has moved, the algorithm leaves that section out of the merge.
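Averaging several frames of the same scene reduces random noise (by roughly √N for N frames with independent noise), which is the sense in which a small sensor can behave like a bigger one. Google’s real HDR+ pipeline also aligns the frames, merges them in a more sophisticated way and tone-maps the result. This Python sketch assumes the frames are already aligned and uses a simple per-tile difference threshold for the movement check; the function name, tile size and threshold are hypothetical.

```python
def merge_burst(frames, tile=2, threshold=0.1):
    """Average a burst of already-aligned greyscale frames, tile by tile.

    frames[0] is the reference. For each tile, any other frame whose mean absolute
    difference from the reference exceeds `threshold` is treated as motion and
    left out of that tile's average, so moving objects don't leave ghosts.
    """
    ref = frames[0]
    height, width = len(ref), len(ref[0])
    merged = [[0.0] * width for _ in range(height)]
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            coords = [(r, c)
                      for r in range(top, min(top + tile, height))
                      for c in range(left, min(left + tile, width))]
            used = [ref]  # the reference frame always contributes
            for frame in frames[1:]:
                diff = sum(abs(frame[r][c] - ref[r][c]) for r, c in coords) / len(coords)
                if diff <= threshold:
                    used.append(frame)
            for r, c in coords:
                merged[r][c] = sum(f[r][c] for f in used) / len(used)
    return merged


# Hypothetical 2x4 burst of a flat grey scene with a little noise; in the third
# frame something bright has moved into the right-hand tile.
burst = [
    [[0.50, 0.52, 0.50, 0.50], [0.48, 0.50, 0.50, 0.50]],
    [[0.52, 0.50, 0.50, 0.50], [0.50, 0.48, 0.50, 0.50]],
    [[0.48, 0.48, 0.90, 0.90], [0.52, 0.52, 0.90, 0.90]],
]
merged = merge_burst(burst)
print([[round(v, 2) for v in row] for row in merged])
# [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
```

Without the movement check, the right-hand tile would average to about 0.63 and the moving object would show up as a faint ghost.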

Portrait Mode is where Google get really technical and apply their love of all things machine learning, combining it with depth mapping. For the Pixel 2 camera, Googlers trained a neural network on around a million example photos of people, teaching the software to recognise what is foreground and what is background. This is used to create a mask, and everything outside the mask is blurred.

The mask is also combined with the depth map created from the split sub-pixels in the sensor. The result is a sharp foreground subject against a blurred background. It works on objects as well as people, and on both the front and rear cameras. As it involves machine learning, it will get better the more you and others use it.
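One simple way to picture how a mask and a depth map can work together is sketched below in Python: pixels inside the mask stay sharp, and background pixels get a box blur whose radius grows with their distance from the focal plane. This is not Google’s renderer (the video doesn’t go into that level of detail); the function name, the box blur and the example values are all assumptions for illustration.

```python
def synthetic_bokeh(image, mask, depth, focus_depth, max_radius=2):
    """Blur background pixels more strongly the further they sit from the focal plane.

    image: rows of greyscale values.
    mask: 1 where the segmentation network says "subject", 0 for background.
    depth: per-pixel depth estimate (arbitrary units), e.g. from the split sub-pixels.
    """
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(height):
        for c in range(width):
            if mask[r][c]:
                continue  # the subject stays sharp
            # Blur radius grows with distance from the focal plane, capped at max_radius.
            radius = min(max_radius, int(abs(depth[r][c] - focus_depth)))
            if radius == 0:
                continue
            # Box blur over background pixels only (the pixel itself is always included),
            # so the subject doesn't bleed into the blurred background.
            window = [image[rr][cc]
                      for rr in range(max(r - radius, 0), min(r + radius + 1, height))
                      for cc in range(max(c - radius, 0), min(c + radius + 1, width))
                      if not mask[rr][cc]]
            out[r][c] = sum(window) / len(window)
    return out


# Hypothetical one-row scene: textured background on the left, subject on the right.
image = [[0, 60, 0, 60, 200, 200]]
mask = [[0, 0, 0, 0, 1, 1]]
depth = [[4, 4, 4, 4, 1, 1]]
print(synthetic_bokeh(image, mask, depth, focus_depth=1, max_radius=1))
# [[30.0, 20.0, 40.0, 30.0, 200, 200]]
```

Real portrait modes typically use a disc-shaped blur that mimics a lens aperture and handle the mask edges far more carefully, but the division of labour is the same: the mask decides what stays sharp, and the depth map decides how much everything else is blurred.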

The YouTube video also runs through some of the testing procedures a phone camera goes through to get it production-ready. Both videos below are well worth watching. The second was shot entirely on a Pixel 2 and shows what the camera can do; the examples in it are amazing, with real depth of colour. Check it out for yourself.

Are you sold on Google’s use of a single rear camera? Would you still prefer a dual camera and if so why? What are Google missing out on?